Unlock Instant BSL Access: How the SignStream API Brings Real-Time British Sign Language Translation to Your App

In a world where real-time digital experiences are the norm, accessibility must keep pace. For Deaf users, that means more than captions; it means British Sign Language (BSL), delivered in their first language and in the moment. That's why we built SignStream™️, a cutting-edge sign language translation API from Signapse.

Whether you're streaming live video, running an event, or building the next-gen chatbot, SignStream translates English text into BSL in 20 seconds or less. It's fast, scalable, and built for dev teams who care about inclusion.

In this post, we will break down how SignStream works, why it's different from anything else on the market, and how you can integrate it into your platform in minutes.

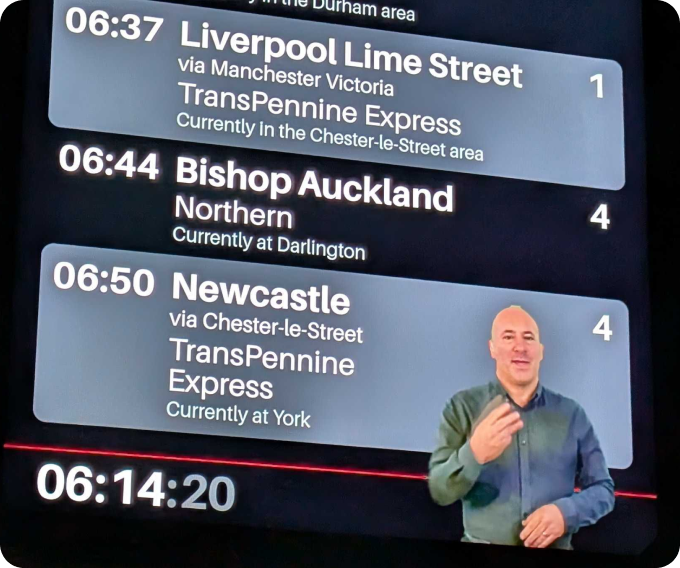

Signapse aims to improve accessibility for the Deaf community through technology. We've already implemented our AI technology to enhance accessibility in transport and post-production video.

We're thrilled to announce the launch of SignStream™️, our unconstrained sign language API. SignStream translates any sentence into British Sign Language (BSL) in 20 seconds or less. This blog will explain how the SignStream API works and how to implement it in your application.

Want to see instant BSL translation in action?

Try the SignStream demo app today! Type up to 20 words and get a real-time British Sign Language video from Rae, our AI digital signer.

The SignStream API is built with developer experience and flexibility in mind. It works seamlessly with any text-providing application. Here are some example use cases:

- Live video streaming

- Live events

- Chatbots

- Website accessibility

- Real-time announcements

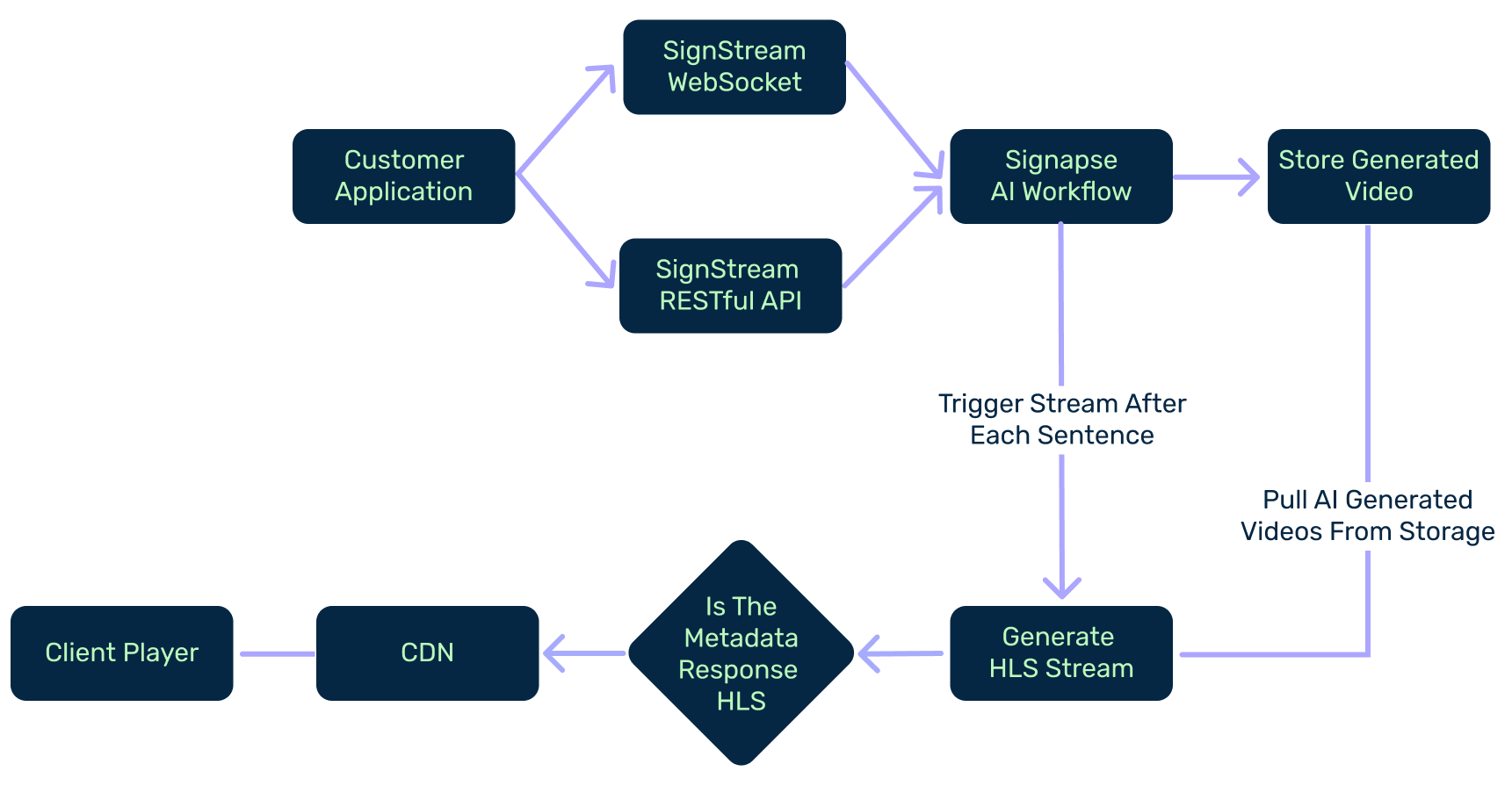

Let's examine how SignStream works with this high-level flow diagram.

Let's walk through the above diagram:

1. Input from the Client: The process begins with a sentence from the customer's application, such as a chat message or video captions.

2. SignStream API: The sentence is sent to the SignStream API, which creates a translation session, validates the format, and sends the text to Signapse's AI workflow.

3. Translation Pipeline (Text → Gloss → Video): Inside the AI workflow, translation happens in three steps:

- The English text is converted into BSL gloss (BSL grammar and structure)

- The gloss is used to generate a signing script

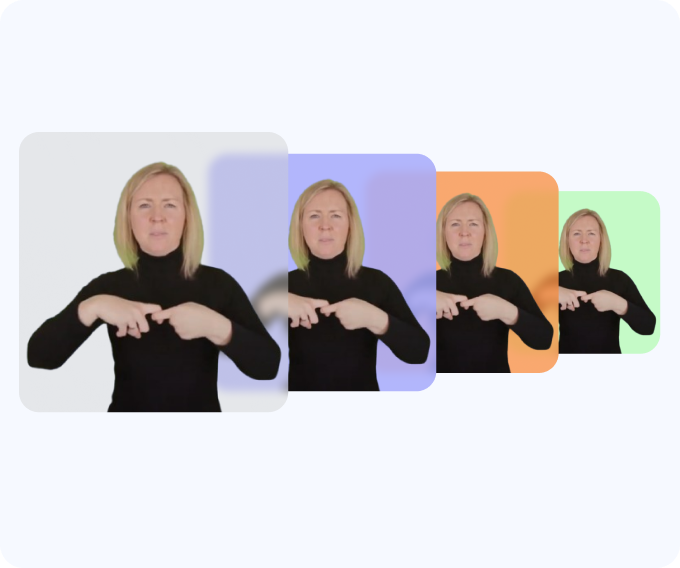

- The script is sent to Rae, our AI signer, to create a BSL video

4. Store the Generated Video: Each generated video is stored in AWS S3 for later processing.

5. Final Processing: After generating the AI video, we perform final processing to adjust output frames per second (fps), video quality, and video size based on customer requirements. We also return the translation either via the existing WebSocket connection or via a content delivery network (CDN).

6. Output: BSL Video Appears Instantly: The client displays the BSL video. The whole process happens in real-time, taking just seconds to complete.

Why do we need sentences? SignStream processes complete sentences because they provide essential context for accurate BSL translation. British Sign Language relies heavily on context, something that would be lost if we translated individual words. By working with full sentences, we can maintain meaning and produce more natural translations.
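To illustrate the point about full sentences, here is a minimal Python sketch of how a client might buffer incoming caption fragments and forward only complete sentences. The `SentenceBuffer` class and its punctuation-based boundary rule are assumptions made for illustration, not part of the SignStream API, and the rule is naive: abbreviations such as "Dr." would be split incorrectly.

```python
import re

# A sentence boundary: terminal punctuation followed by whitespace.
_BOUNDARY = re.compile(r"(?<=[.!?])\s+")


class SentenceBuffer:
    """Accumulates text fragments and releases only complete sentences."""

    def __init__(self) -> None:
        self._pending = ""

    def feed(self, fragment: str) -> list[str]:
        """Add a fragment and return any sentences that are now complete."""
        self._pending = f"{self._pending} {fragment}".strip()
        parts = _BOUNDARY.split(self._pending)
        if parts[-1].endswith((".", "!", "?")):
            # Everything buffered so far ends on a sentence boundary.
            complete, self._pending = parts, ""
        else:
            # Hold back the unfinished tail until more text arrives.
            complete, self._pending = parts[:-1], parts[-1]
        return [sentence for sentence in complete if sentence]
```

Each string returned by `feed` could then be sent as the sentence of one translation request, while partial text stays in the buffer.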

SignStream currently supports two ingress points: a RESTful endpoint and a WebSocket. Both can be configured to provide the translation you need.

All translations can be configured through metadata, an object sent with the request. Some fields are required, such as responseFormat and type (RESTful only), but the rest can be configured to your needs. The metadata is returned with each response, making it incredibly useful for syncing the sign language with the main video feed or chat message.
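As a rough sketch of the syncing idea, the Python below attaches a caption timestamp to the metadata and reads it back from the reply. The `captionStartMs` key is hypothetical, as is the assumption that custom keys are accepted and that the reply is a JSON object carrying the same `metadata` object; only `action`, `sentence`, and `responseFormat` come from this post.

```python
import json
from typing import Optional


def build_request(sentence: str, response_format: str, caption_start_ms: int) -> str:
    """Serialise a translation request that carries a sync timestamp."""
    return json.dumps({
        "action": "LiveTranslation",
        "sentence": sentence,
        "metadata": {
            "responseFormat": response_format,
            # Hypothetical custom key: where this caption starts in the main feed.
            "captionStartMs": caption_start_ms,
        },
    })


def caption_offset(raw_response: str) -> Optional[int]:
    """Read the sync timestamp back from a reply, assuming metadata is echoed."""
    message = json.loads(raw_response)
    metadata = message.get("metadata") or {}
    return metadata.get("captionStartMs")
```

With a round trip like this, the client would not need to keep its own lookup table to know where each returned video belongs on the timeline.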

Here's an example WebSocket request that translates the sentence "Welcome and thank you for attending our conference on Deaf accessibility." into BSL. We specify that we want the response via an HLS stream.

{

"action": "LiveTranslation",

"sentence": "Welcome and thank you for attending our conference on Deaf accessibility.",

"metadata": {

"responseFormat": "hls"

}

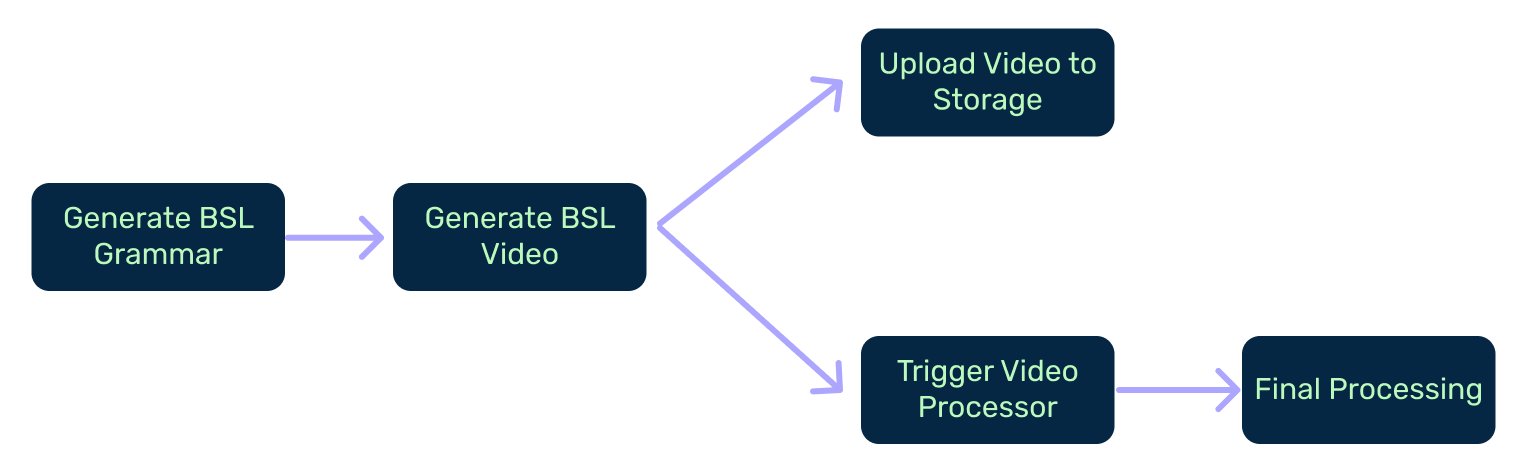

}When a sentence is sent to either ingress point, it enters the Signapse AI workflow. At the heart of this process, we combine Signapse's proprietary AI with commercial large language models (LLMs) to transform English sentences into BSL grammar. From this grammar, we generate a BSL digital signer. The system creates an SD video for each sentence and uploads it to our storage, simultaneously triggering the final processor.

Let's walk through the above diagram:

1. Generate BSL Grammar: First, the English sentence is converted into British Sign Language (BSL) grammar. This step reorganises the sentence into BSL word order, like "YOUR NAME WHAT" instead of "What is your name?".

2. Generate BSL Video: Next, the system uses the BSL grammar to generate a sign language video. Rae, the digital signer, performs the sentence in BSL.

3. Two Things Happen at Once: As soon as the BSL video is created, two actions happen at the same time:

- Upload to storage: The video is saved in a storage system so it can be used later or downloaded.

- Trigger Video Processor: An event is placed on an event stream with key translation details such as the metadata and a reference to the video. This event triggers the final processing step, which converts the video into the requested format.

4. Final Processing: Lastly, the video processor finishes the job. This is where the video becomes ready to use; it is fully packaged and available to send to a user, display on screen, or stream online.
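The "two things happen at once" step can be pictured with a small asyncio sketch. The function names, the event fields (`videoRef`, `metadata`), and the in-memory list standing in for the event stream are all assumptions for illustration; the actual storage and event services are not described in this post.

```python
import asyncio


async def upload_to_storage(video: bytes, key: str) -> str:
    """Stand-in for an object-storage upload; returns the stored key."""
    await asyncio.sleep(0)  # placeholder for network I/O
    return key


async def publish_event(stream: list, event: dict) -> None:
    """Stand-in for placing an event on an event stream."""
    await asyncio.sleep(0)  # placeholder for network I/O
    stream.append(event)


async def finish_sentence(video: bytes, key: str, metadata: dict, stream: list) -> str:
    """Start the upload and the processor trigger together, then wait for both."""
    event = {"videoRef": key, "metadata": metadata}
    stored_key, _ = await asyncio.gather(
        upload_to_storage(video, key),
        publish_event(stream, event),
    )
    return stored_key
```

Running both tasks with `asyncio.gather` means neither waits for the other to finish, which is the property the step above describes.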

When the processing service activates, it performs several tasks based on the provided metadata. For example, if the responseFormat is hls, the processor converts the MP4 into an HLS manifest with associated .ts files for streaming. This processing service runs for every sentence generated by the BSL AI models, creating a continuous stream of sign language footage.
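For readers unfamiliar with HLS, the manifest is a plain-text playlist that lists the .ts segments in order. The Python sketch below writes a basic media playlist from a list of segment names and durations. It illustrates the file format only and makes no claim about how the SignStream processor builds its manifests; the segment names in the usage note are made up.

```python
import math


def build_media_playlist(segments: list[tuple[str, float]], finished: bool = True) -> str:
    """Render an HLS media playlist for (uri, duration_seconds) pairs."""
    if not segments:
        raise ValueError("at least one segment is required")
    # The target duration must be an integer no smaller than any segment duration.
    target = math.ceil(max(duration for _, duration in segments))
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target}",
        "#EXT-X-MEDIA-SEQUENCE:0",
    ]
    for uri, duration in segments:
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    if finished:
        # Omit this tag for a live playlist that will keep growing.
        lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"
```

Calling `build_media_playlist([("sentence0.ts", 4.0), ("sentence1.ts", 2.52)], finished=False)` yields a playlist a player can keep polling as new sentence segments are appended, which matches the continuous-stream behaviour described above.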

At this stage, we can also adjust the output video quality. While standard definition (SD) footage suits most applications, we also support HD video formats for specific scenarios. We achieve this by upscaling the AI-generated SD footage before converting it to an HLS stream.

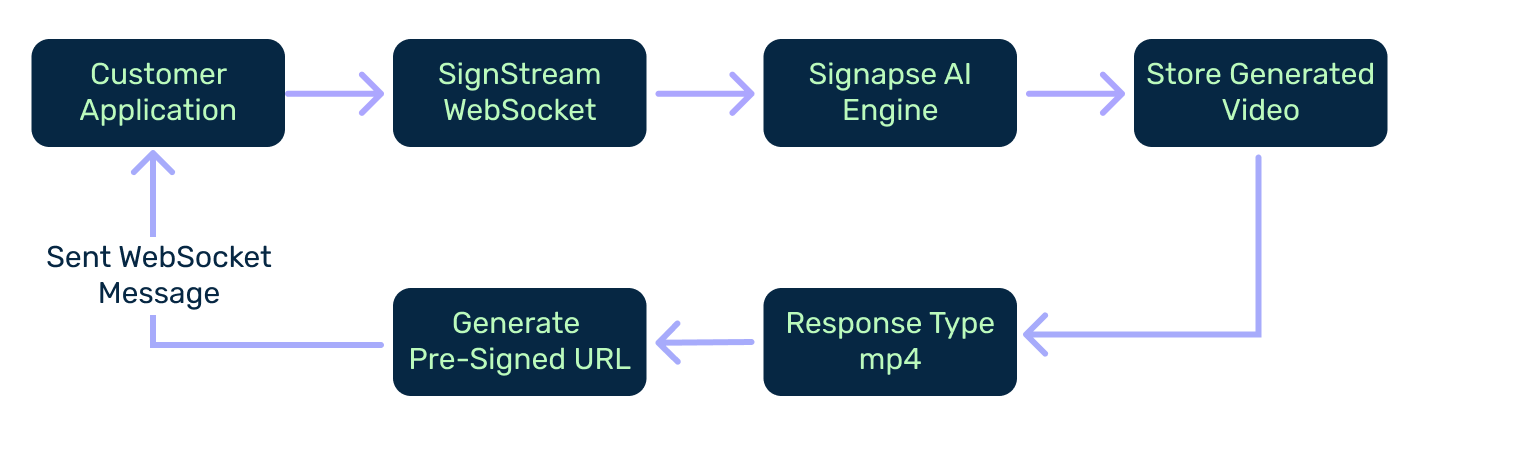

We've discussed video streaming extensively in this blog, but as mentioned earlier, SignStream is versatile and can also power web-based chat applications. Below is a high-level architecture diagram showing how SignStream works for chat applications.

Let's walk through the above diagram:

1. Customer Application Sends Message: A user sends a chat message through their app (like a chatbot or helpdesk system)

2. Message Sent to SignStream WebSocket: The message is sent to the SignStream WebSocket.

3. Processed by Signapse AI Engine: The message is passed to the Signapse AI engine. This engine translates the English message into BSL grammar, then turns it into a sign language video using Rae, the AI signer.

4. Store the Generated Video: The BSL video is saved in a secure storage system so it can be accessed later.

5. System Checks Response Type (mp4): Once the video is saved, the system confirms the file type; for example, it checks that it's an MP4 video file.

6. Generate Pre-Signed URL: Next, the system creates a secure, pre-signed URL. This link gives temporary access to the BSL video file so it can be shown inside the chat app.

7. Send WebSocket Message Back to Customer App: The pre-signed URL is sent back to the customer app through the same WebSocket.

8. BSL Video Plays in the Chat: The user now sees Rae signing the message in BSL, right inside their chat conversation.
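The pre-signed URL in step 6 is a link that carries its own expiry time and a signature, so it grants temporary access without any login. The Python sketch below shows that general idea with a simple HMAC scheme. It is a conceptual illustration only: cloud storage services use their own signing schemes, and the parameter names `expires` and `signature`, the example host, and the secret are all invented here.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse


def _sign(base_url: str, expires: int, secret: bytes) -> str:
    """HMAC over the URL and its expiry, so neither can be altered."""
    payload = f"{base_url}?expires={expires}".encode()
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def sign_url(base_url: str, secret: bytes, ttl_seconds: int, now: Optional[float] = None) -> str:
    """Return base_url with an expiry timestamp and signature appended."""
    issued = time.time() if now is None else now
    expires = int(issued + ttl_seconds)
    query = urlencode({"expires": expires, "signature": _sign(base_url, expires, secret)})
    return f"{base_url}?{query}"


def verify_url(url: str, secret: bytes, now: Optional[float] = None) -> bool:
    """Accept the URL only if the signature matches and it has not expired."""
    parsed = urlparse(url)
    query = parse_qs(parsed.query)
    try:
        expires = int(query["expires"][0])
        signature = query["signature"][0]
    except (KeyError, ValueError):
        return False
    base_url = f"{parsed.scheme}://{parsed.netloc}{parsed.path}"
    expected = _sign(base_url, expires, secret)
    current = time.time() if now is None else now
    return hmac.compare_digest(signature.encode(), expected.encode()) and current < expires
```

Because the expiry is part of the signed payload, a client cannot extend its own access by editing the link.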

Here's an example of the message format:

    {
      "action": "LiveTranslation",
      "sentence": "Welcome and thank you for attending our conference on Deaf accessibility.",
      "metadata": {
        "responseFormat": "mp4"
      }
    }

As you can see, this process is similar to the HLS flow, but instead of generating a stream, it returns a pre-signed URL with the completed MP4 file in SD format. This approach gives your application complete flexibility in choosing the format that works best for you.
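Since the two message examples in this post differ only in responseFormat, a client could build both from one helper. The Python sketch below does that and rejects unknown formats before anything is sent. The helper name and the client-side validation are assumptions; the set of formats contains only the two values shown in this post and may not be the full list the API accepts.

```python
import json

# The two responseFormat values that appear in this post.
SUPPORTED_FORMATS = {"hls", "mp4"}


def build_live_translation(sentence: str, response_format: str) -> str:
    """Serialise a LiveTranslation message in the shape shown in the examples."""
    text = sentence.strip()
    if not text:
        raise ValueError("sentence must not be empty")
    if response_format not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported responseFormat: {response_format!r}")
    return json.dumps({
        "action": "LiveTranslation",
        "sentence": text,
        "metadata": {"responseFormat": response_format},
    })
```

A chat application would call it with "mp4", while a live-stream integration would pass "hls" and hand the result to its player.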

So what's next? As our system evolves, we'll add support for more response formats. Currently, the system supports HLS direct to a player, HLS segments via WebSocket to a pre-signed URL, and MP4 delivery via a pre-signed URL. We will also be adding support for specific background colours, output formats, connection protocols and much, much more.

SignStream is more than a clever API; it's a leap forward for digital accessibility in British Sign Language. By making real-time BSL translation available through a lightweight, developer-friendly interface, Signapse is helping platforms go beyond compliance to true inclusion.

Whether you're building for live audiences, internal teams, or public services, SignStream lets you plug in instant, AI-powered sign language without the delays or cost of traditional workflows. It's accessibility at the speed of modern communication.

Let's stop leaving the Deaf community behind. Let's make accessibility automatic.

Ready to build with SignStream?

Book a demo and explore how real-time BSL can transform your digital experiences.